Using Futures For Non-blocking Computation: basic use of futures is easy with the factory method on Future, which executes a provided function asynchronously, handing you back a future result of that function. A duration can be written with the duration DSL, for example val d: Duration = 10.seconds
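As a rough sketch of the factory method on Future together with a duration, the snippet below runs a small computation asynchronously and then waits for it. The object name FutureSketch and the method compute are made up for illustration, and blocking with Await.result is used only to keep the example short.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// Hypothetical names (FutureSketch, compute) chosen for this sketch only.
object FutureSketch {
  // Upper bound on how long we are willing to wait for the result.
  val d: Duration = 10.seconds

  def compute(): Int = {
    // Future { ... } schedules the body on the implicit global ExecutionContext.
    val f: Future[Int] = Future { 21 * 2 }
    // Blocking is acceptable in a demo; real code would usually map or flatMap instead.
    Await.result(f, d)
  }
}
```

Calling FutureSketch.compute() returns the value computed inside the future once it has completed.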

Array is a special kind of collection in Scala. It is a fixed-size data structure that stores elements of the same data type. The Array.range() method generates an array containing a sequence of increasing integers in a given range. Separately, operations on futures often require a duration to be specified.
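A minimal sketch of range() on the Array companion object is shown below. The object and value names are invented for this example; the end of the range is exclusive, and an optional third argument sets the step.

```scala
// Hypothetical names (RangeSketch, upTo, stepped) chosen for this sketch only.
object RangeSketch {
  // Integers from 0 up to, but not including, 5.
  val upTo: Array[Int] = Array.range(0, 5)        // Array(0, 1, 2, 3, 4)

  // Same idea with a step of 3.
  val stepped: Array[Int] = Array.range(0, 10, 3) // Array(0, 3, 6, 9)
}
```

Because arrays are fixed size, both results keep the length they were created with.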

#Scala array how to

This article shows how to convert a JSON string to a Spark DataFrame using Scala. A JSON value can be an object, array, number, string, true, false, or null. Taking the ExecutionContext as an implicit parameter allows the caller of the method, or creator of the instance of the class, to decide which ExecutionContext should be used. For typical REPL usage and experimentation, importing the global ExecutionContext is often desired.

Request an ExecutionContext from the caller by adding an implicit parameter list: def myMethod(myParam: MyType)(implicit ec: ExecutionContext) = … Or class MyClass(myParam: MyType)(implicit ec: ExecutionContext)
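The implicit parameter list can be sketched as follows. The names doubleAsync, Scaler and run are hypothetical and exist only for this example; the caller supplies the ExecutionContext, here by importing the global one.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

// Hypothetical names (ImplicitEcSketch, doubleAsync, Scaler, run) for illustration only.
object ImplicitEcSketch {
  // The method does not pick an ExecutionContext; it asks the caller for one.
  def doubleAsync(n: Int)(implicit ec: ExecutionContext): Future[Int] =
    Future(n * 2)

  // The same pattern on a class constructor.
  class Scaler(factor: Int)(implicit ec: ExecutionContext) {
    def scale(n: Int): Future[Int] = Future(n * factor)
  }

  def run(): (Int, Int) = {
    // The caller decides which ExecutionContext is used.
    import ExecutionContext.Implicits.global
    val doubled = Await.result(doubleAsync(4), 5.seconds)
    val scaled  = Await.result(new Scaler(3).scale(3), 5.seconds)
    (doubled, scaled)
  }
}
```

A different caller could pass a dedicated thread pool instead of the global context without changing doubleAsync or Scaler.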

#Scala array code

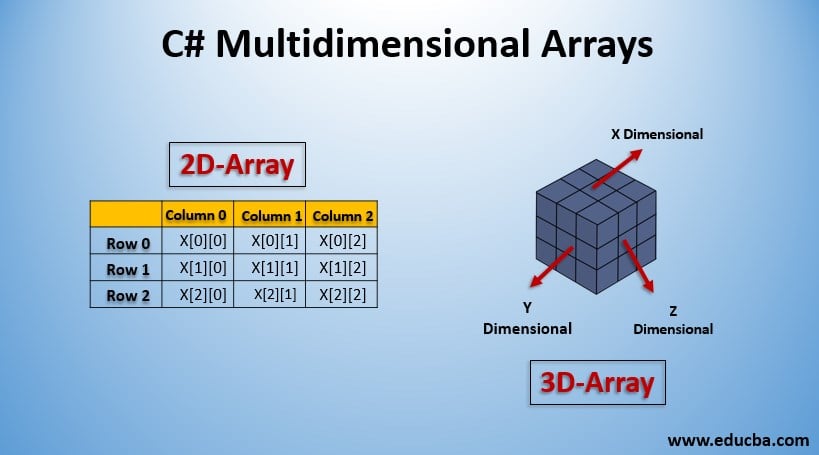

An array is an index-based data structure: indices run from 0 to n-1, where n is the length of the array. We can create an array in Scala with default initial elements according to the data type, and then fill in values later.

scala> var a = new Array[Int](3) // This can hold three elements.

In Scala we can create 2D spaces with nested lists or tuples. For small regions, nested collections are helpful. For large ones, a more memory-efficient representation is needed. In advanced physics, more dimensions are needed to understand our universe. In Scala, we build 1 to 5 dimensional arrays with the Array.ofDim function.

The scala.concurrent package object contains primitives for concurrent and parallel programming. A more detailed guide to Futures and Promises, including discussion and examples, is available in the official Scala documentation. When working with Futures, you will often find that importing the whole concurrent package is convenient. When using things like Futures, it is often required to have an implicit ExecutionContext in scope. The general advice for these implicits is as follows: if the code in question is a class or method definition, and no ExecutionContext is available, request one from the caller through an implicit parameter list.

Identifiers in the scala package and the scala.Predef object are always in scope by default. Some of these identifiers are type aliases provided as shortcuts to commonly used classes. Other aliases refer to classes provided by the underlying platform. For example, on the JVM, String is an alias for the platform's own string class.
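To make the array creation steps concrete, here is a small sketch that allocates an array with default elements, fills in values afterwards, and builds a two-dimensional array with Array.ofDim. The object and method names are assumptions made for this example.

```scala
// Hypothetical names (ArraySketch, filled, grid) chosen for this sketch only.
object ArraySketch {
  def filled(): Array[Int] = {
    // new Array[Int](3) holds three elements, each starting at the Int default of 0.
    val a = new Array[Int](3)
    a(0) = 10 // indices run from 0 to length - 1
    a(2) = 30
    a
  }

  def grid(): Array[Array[Int]] = {
    // A 2 x 3 array of Ints, also initialized to 0.
    val g = Array.ofDim[Int](2, 3)
    g(1)(2) = 7 // row 1, column 2
    g
  }
}
```

The element at index 1 of the first array is never assigned, so it keeps its default value.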

#Scala array install

This is the documentation for the Scala standard library. The scala package contains core types like Int, Float and Array. Databricks runtimes provide many libraries. To make third-party or locally-built Scala libraries available to notebooks and jobs running on your Azure Databricks clusters, you can install libraries following these instructions: Install Scala libraries in a cluster. I am new to functional programming and am learning it in Scala for a university project.
